Navigation World Models

In vision-based robotics, Navigation World Models (NWMs) offer a unified framework for perception, planning, and control by either learning policies directly or by predicting future observations to inform trajectory optimization. Their goal is to help mobile agents reach targets, explore unknown spaces, and adapt to novel environments from real-time visual inputs. We mainly discuss the following four state-of-the-art NWMs: GNM [1], which trains a cross-robot navigation policy on multi-robot datasets; ViNT [2], a Transformer-based NWM for image-goal navigation with zero-shot generalizablility; NoMaD [3], which unifies goal-conditioned and undirected exploration in a single diffusion-based policy; and NWM [4], a large-scale Conditional Diffusion Transformer world model that simulates video trajectories for MPC motion planning. In this study, we discuss the core techniques they employ, and we also find common challenges: heavy reliance on large, diverse datasets; limited planning horizons; computational overhead from diffusion decoding; and the high model and compute demands of video prediction. This survey provides a detailed comparison of these strengths and weaknesses.

- Introduction

- Navigation World Models

- Architectures of NWMs

- Limitations of NWMs

- Conclusion and Future Work

- References

Introduction

Robot navigation is difficult because the robot must have good vision, plan ahead, and adapt to new environments. Traditional methods like reinforcement learning run into difficulties when the robot can only access part of the scene. RL also fail if the lighting changes suddenly or it faces moving objects like pedestrians. These limitations make it difficult to learn a generalizable navigation policy from RL.

Navigation World Models (NWMs) address these challenges by leveraging large-scale web and video datasets to learn forward‐prediction models and goal‐conditioned policies. By generating plausible future trajectories and action sequences from raw egocentric frames, NWMs provide agents with the ability to imagine outcomes and plan around obstacles, significantly reducing reliance on task‐specific data collection.

NWMs tackle navigation difficulties through several core mechanisms: they employ latent‐space prediction to simulate environment dynamics, diffusion or transformer architectures to model multimodal action distributions, and goal‐masking or embedding techniques to condition planning on desired targets. However, the following problems still challenge NWM in real-world deployment. Training on web data demands immense computation and careful data curation; transformer-based NWM often require prior information about the environment for long‐range tasks; and video‐prediction models may struggle with increasing errors over long-term rollouts.

In this work, we conduct a comparison of four leading NWM approaches: GNM [1], ViNT [2], NoMaD [3], and Conditional Diffusion Transformers [4]. We evaluate their training pipeline, inference strategies, and performance trade‐offs.

Navigation World Models

Navigation World Models learn an internal representation of how a robot’s view changes in response to its own movements. We formalize this process as follows. At time step \(i\), the robot observes an image \(x_i \in \mathcal{X}\) and takes a navigation action \(a_i\), such as the speed and steering control. The model first encodes each image into a latent vector

\[s_i = \mathrm{enc}_\theta(x_i),\]where \(\mathrm{enc}_\theta: \mathcal{X} \to \mathbb{R}^d\) is a learned encoder with parameters \(\theta\). To capture the historical information, we collect the past \(m\) latent representations into a tuple

\[\mathbf{s}_i = \{s_{i-m+1}, s_{i-m+2}, \ldots, s_i\}.\]Given this history \(\mathbf{s}_i\) and action \(a_i\), the world model \(F_\theta\) predicts the next latent state:

\[s_{i+1} \sim F_\theta\bigl(s_{i+1} \mid \mathbf{s}_i, a_i \bigr).\]At inference time, these samples \(\{s_{i+1}\}\) are scored by how well they align with a predefined navigation target, and the highest-scoring action is executed by the robot in each step.

To build this representation, they first collect large amounts of first-person video from robots or humans moving through varied environments, along with the low-level controls (such as forward speed or turn angle) that produced each frame. When a navigation goal is provided—whether as a target image, a GPS waypoint, or another embedding—the model also takes that goal signal as input. During training, each video frame is passed through an encoder to produce a compact feature vector. A Transformer or diffusion network then learns either to predict the next feature vector given the current one and the chosen action (world-prediction models) or to generate the right sequence of future actions given the current feature and goal (policy models). Some methods augment this process with sparse maps or depth readings to strengthen geometric grounding, but all share the same core pipeline of encoding images, learning a predictive model with actions and goals, and freezing the learned weights for deployment.

At inference time, the robot captures its current view and converts it, along with the goal descriptor, into feature vectors. The world model then “imagines” several possible futures by rolling out its predictions—either as a chain of feature-space frames or as full action sequences—and assigns each a score based on how closely the imagined endpoint matches the goal and how safe the path appears. The robot executes the first step of the highest-scoring plan, then repeats the process from its new vantage point. This single mechanism supports a variety of tasks without building explicit maps: it can drive toward an image-specified location, explore unknown rooms by favoring novel trajectories, follow lists of waypoints, and avoid unexpected obstacles. By unifying perception and planning in a learned predictive model, NWMs adapt to new scenes and robot bodies, leverage unstructured video data, and plan over longer horizons than purely reactive policies.

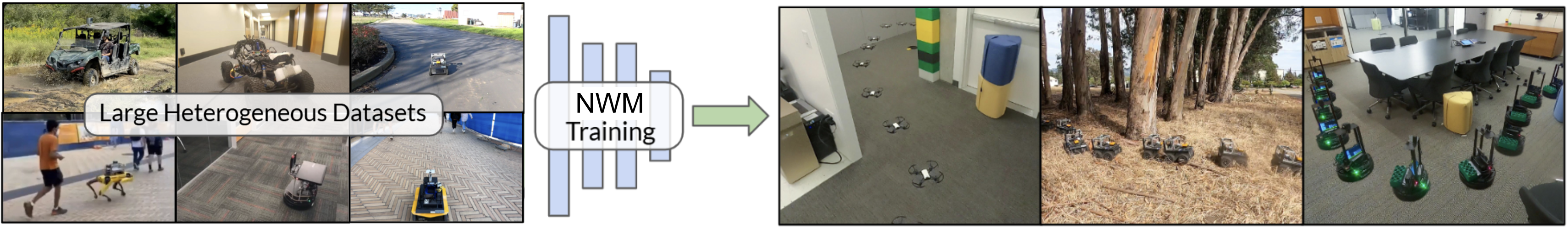

Navigation World Models work reliably in both indoor and outdoor settings, allowing robots to move through rooms, streets, and outdoor environments with the same navigation policy. By training on heterogeneous video datasets, a single NWM can even learn navigation behaviors that apply to different robots without any additional per-robot data collection or fine-tuning.

NWMs apply to both indoor and outdoor environments in real-world deployment.

NWMs apply naturally to large-scale exploration and mapping tasks. For kilometer-scale exploration, the world model can plan long sequences of actions by chaining its imagined rollouts, allowing the robot to traverse vast, unstructured areas without getting stuck or repeatedly visiting the same spots. In route-guided navigation, the model conditions on a high-level path or route description—such as a graph of waypoints or a coarse GPS trace—and refines each segment through its predictive planning, ensuring smooth and safe progress from one landmark to the next. For coverage mapping, the model scores candidate trajectories not only by goal proximity but also by how much novel area they reveal, enabling the robot to systematically sweep a region to build a detailed map or occupancy estimate.

NWMs trained on a large scale of datasets can learn a navigation policy controlling various robots without collecting new robot-specific data.

Architectures of NWMs

We summarize the architectures of NWMs in this section and the key techniques used in these methods: GNM [1], ViNT [2], NoMaD [3], and the Conditional Diffusion Transformer [4].

General Navigation Model (GNM) builds a single navigation policy based on CNN-based Mobilenetv2 [5] that works for many robots without any fine-tuning. Instead of directly generating low-level control signals, GNM uses relative waypoints \(p(x,y)\) and a yaw change \(\psi\) to represent mid-level actions. These mid-level actions capture the robot’s intent at each step and therefore do not depend on any specific robot’s structure or dynamics.

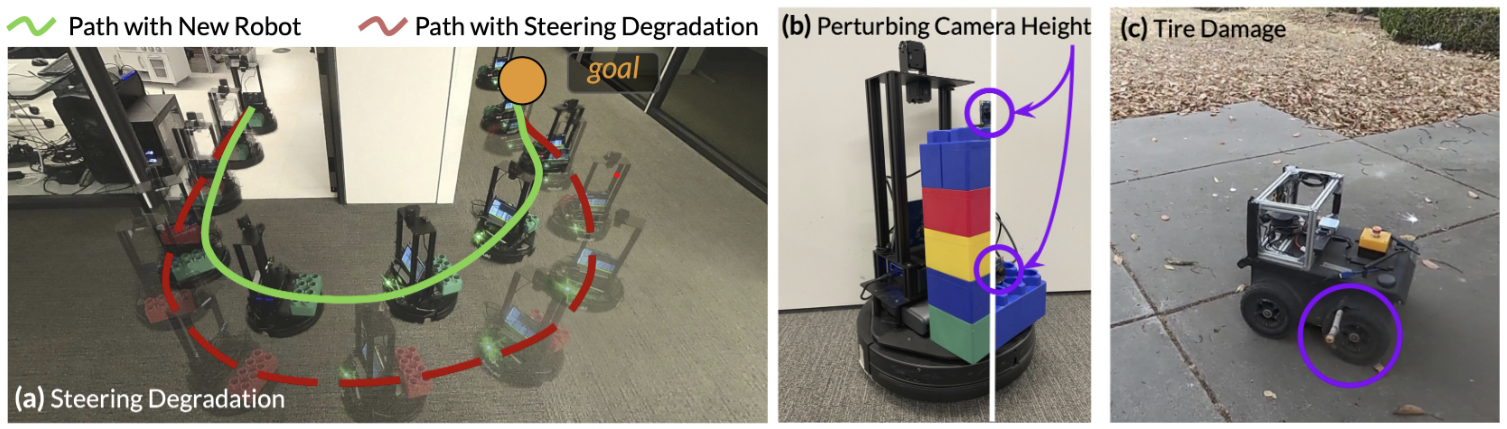

To handle differences in dynamics (such as maximum speed, turning radius, or body dimensions) and enable real-world deployment, GNM learns an embodiment context that adapts the mid-level actions to each robot’s low-level control data. This embodiment context encodes how a robot’s observations change in response to small action variations. During training, data from multiple robots are mixed: GNM first learns general motion patterns for navigation, and then the embodiment context fine-tunes those patterns to each platform’s unique characteristics and computes the actual control signals.

When a new robot appears—or when an existing robot’s dynamics change—it runs a brief calibration routine to infer its embodiment context from a small amount of test-time data. After that, the same control policy can be applied zero-shot to the new robot or environment. This combination of standardized mid-level actions, a per-robot context embedding, and quick calibration enables GNM to deliver a universal navigation policy.

GNM can achieve zero-shot deployment on new robots. The learned navigation policy is also robust to robot degradation or physical damages.

Visual Navigation Transformer (ViNT) extends the mid-level action idea by having a single Transformer predict not only the next waypoint but also the remaining distance to the goal. During training, ViNT learns to map each encoded view and goal embedding to a pair \((p_i, d_i)\), where \(p_i\) is the next relative waypoint and \(d_i\) is the predicted distance. At inference, the goal is turned into a learned prompt token that steers the model’s attention toward the target.

Long-distance navigation is handled by chaining these waypoint–distance predictions: The agent iteratively update the relative waypoint and the distance prediction in each navigation step. This iterative estimation of remaining distance serves as an implicit self‐correction mechanism: whenever the predicted distance does not decrease as expected, ViNT automatically adjusts its subsequent waypoint predictions to compensate and maintain accurate long‐range progress. At inference, the given target image or GPS coordinate is converted into a learned prompt token that steers the Transformer’s attention.

For very large scales (kilometers), ViNT can be plugged into a lightweight graph planner or diffusion-based subgoal generator: the high-level planner proposes coarse waypoints every few hundred meters, and ViNT refines each segment with its Transformer-based model to ensure stable task progress. This combination of prompt-based goal conditioning and iterative refinement helps ViNT navigate over long distances in real time without heavy mapping or fine-tuning.

ViNT handles long-range navigation task with zero-shot generalizability.

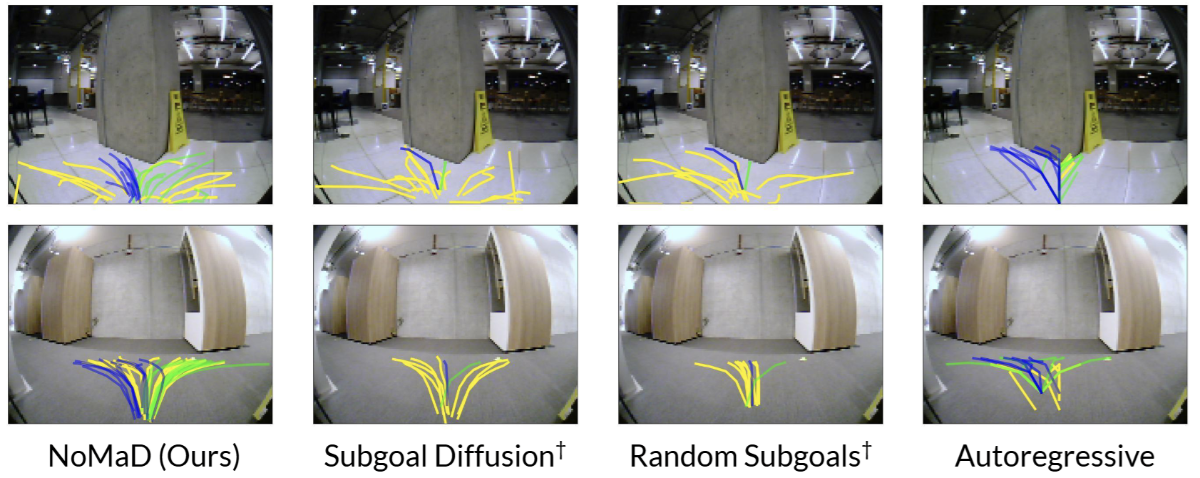

NoMaD [3] and the Conditional Diffusion Transformer [4] employ the diffusion policies as their NWM architectures. NoMaD models action planning as sampling an entire action sequence \(a_{1:H}\) conditioned on the current state embedding \(s\) and a goal masking \(g\). Formally, at inference it draws

\[a_{1:H} \sim p_\theta\bigl(a_{1:H} \mid s, g\bigr),\]where \(p_\theta\) is parameterized by a Transformer-based diffusion network. During training, NoMaD corrupts ground-truth sequences with noise and trains a Transformer-U-Net to reverse that corruption, learning to denoise action trajectories step by step. At test time, it starts from random noise and runs the learned reverse-diffusion process inside the Transformer to generate coherent, multimodal plans that either navigate toward a goal (when \(g\) is the target) or explore freely (when \(g\) is masked).

By setting different goal masks, NoMaD can produce multiple action sequences in parallel that approximate multimodal action distributions. Generating multiple trajectories in each step helps the robot balance safe navigation and environment exploration.

NoMaD generates multimodal undirected predictions in each step. It generates safer trajectories for each goal compared with NWM baselines.

The Conditional Diffusion Transformer employs diffusion in a way that differs from NoMaD. Rather than generating action sequences, it predicts entire future observation trajectories in a learned latent space. It encodes each frame into a compact feature vector with a pretrained VAE and then uses a large Transformer-style diffusion network to simulate entire future trajectories. At planning time, these imagined feature sequences are scored by a goal similarity metric with a safety criterion, and Model Predictive Control picks the best candidate.

The Conditional Diffusion Transformer method solves the tasks with complex state and action constraints. Because it generates full observation rollouts in latent space, it can enforce that not only the endpoint but also the entire imagined trajectory matches specified requirements. High-quality camera-view predictions help impose state-based constraints such as following a reference path or keeping away from obstacles. In contrast to simpler world-model baselines that only check whether the final state reaches the goal, Conditional Diffusion Transformer lets planners score and filter trajectories based on detailed, frame-by-frame similarity to a desired path.

The Conditional Diffusion Transformer method helps the robot follows the input trajectories safely in complex outdoor environments.

We summarize the key aspects of the four methods in the following table.

| Model | Architecture | Best Task(s) | Input / Output | Key Technique |

|---|---|---|---|---|

| GNM [1] | CNN policy + Embedding table | Image-goal, waypoint follow | In: image frame, goal image, robot ID Out: relative waypoint + yaw change |

Relative waypoints + embodiment context |

| ViNT [2] | Transformer (31 M params) | Long-distance navigation | In: image frame, goal prompt token Out: next waypoint + residual distance |

Prompt-based goal embedding; dual prediction |

| NoMaD [3] | Transformer-based diffusion | Exploration & goal navigation | In: state embedding, goal mask Out: full action sequence (length H) |

Conditional diffusion policy |

| Conditional Diffusion Transformer [4] | VAE + Transformer diffusion | Constrained path following | In: current latent, goal / constraint embedding Out: future latent trajectory |

Latent video prediction; MPC reranking |

Limitations of NWMs

Despite their strong results, current Navigation World Models share a number of shortcomings. First, they all depend on massive, diverse video and action datasets; collecting and curating this data at scale remains costly, and domain gaps between web or human footage and the robot’s deployment environment can hurt performance. Second, models based on diffusion (NoMaD and the Conditional Diffusion Transformer) suffer from poor inference efficiency: generating and denoising entire action sequences or future latent trajectories requires dozens to hundreds of network evaluations per planning step, making real-time control on resource-constrained robots challenging. Third, purely policy-based methods like GNM and ViNT are limited by their fixed action vocabulary and prediction horizon. GNM handles only image-goal and waypoint tasks out of the box, leaving open-ended exploration to auxiliary modules, while ViNT’s implicit self-correction can still drift in severely cluttered or feature-sparse scenes and must rely on external subgoal planners for kilometer-scale journeys.

Conclusion and Future Work

Navigation World Models offer a powerful way to unify perception and planning by learning to predict future observations or actions from raw visual inputs. Across GNM, ViNT, NoMaD, and Conditional Diffusion Transformer, we see methods that generalize across robot types, handle long-distance goals, sample diverse exploration paths, and enforce complex trajectory constraints. Each achieves strong results in its niche, but all share challenges around data scale, inference speed, and error accumulation. Future work would focus on making diffusion-based methods practical on the small robots demanding fast inference efficiency. Better techniques for domain adaptation and online correction can reduce compounding errors in new environments.

References

[1] Shah, Dhruv, et al. “Gnm: A general navigation model to drive any robot.” 2023 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2023.

[2] Shah, Dhruv, et al. “ViNT: A foundation model for visual navigation.” arXiv preprint arXiv:2306.14846 (2023).

[3] Sridhar, Ajay, et al. “Nomad: Goal masked diffusion policies for navigation and exploration.” 2024 IEEE International Conference on Robotics and Automation (ICRA). IEEE, 2024.

[4] Bar, Amir, et al. “Navigation world models.” Proceedings of the Computer Vision and Pattern Recognition Conference. 2025.

[5] Sandler, Mark, et al. “Mobilenetv2: Inverted residuals and linear bottlenecks.” Proceedings of the IEEE conference on computer vision and pattern recognition. 2018.